How to Create PagerDuty Alerts

Fix Inventory constantly monitors your infrastructure and can alert you to any detected issues. PagerDuty is the de facto standard for escalating alerts. In this guide, we will configure Fix Inventory to send alerts to PagerDuty with a custom command.

Prerequisites

This guide assumes that you have already installed and configured Fix Inventory to collect your cloud resources.

You will also need a valid routing key for your PagerDuty account.

Directions

-

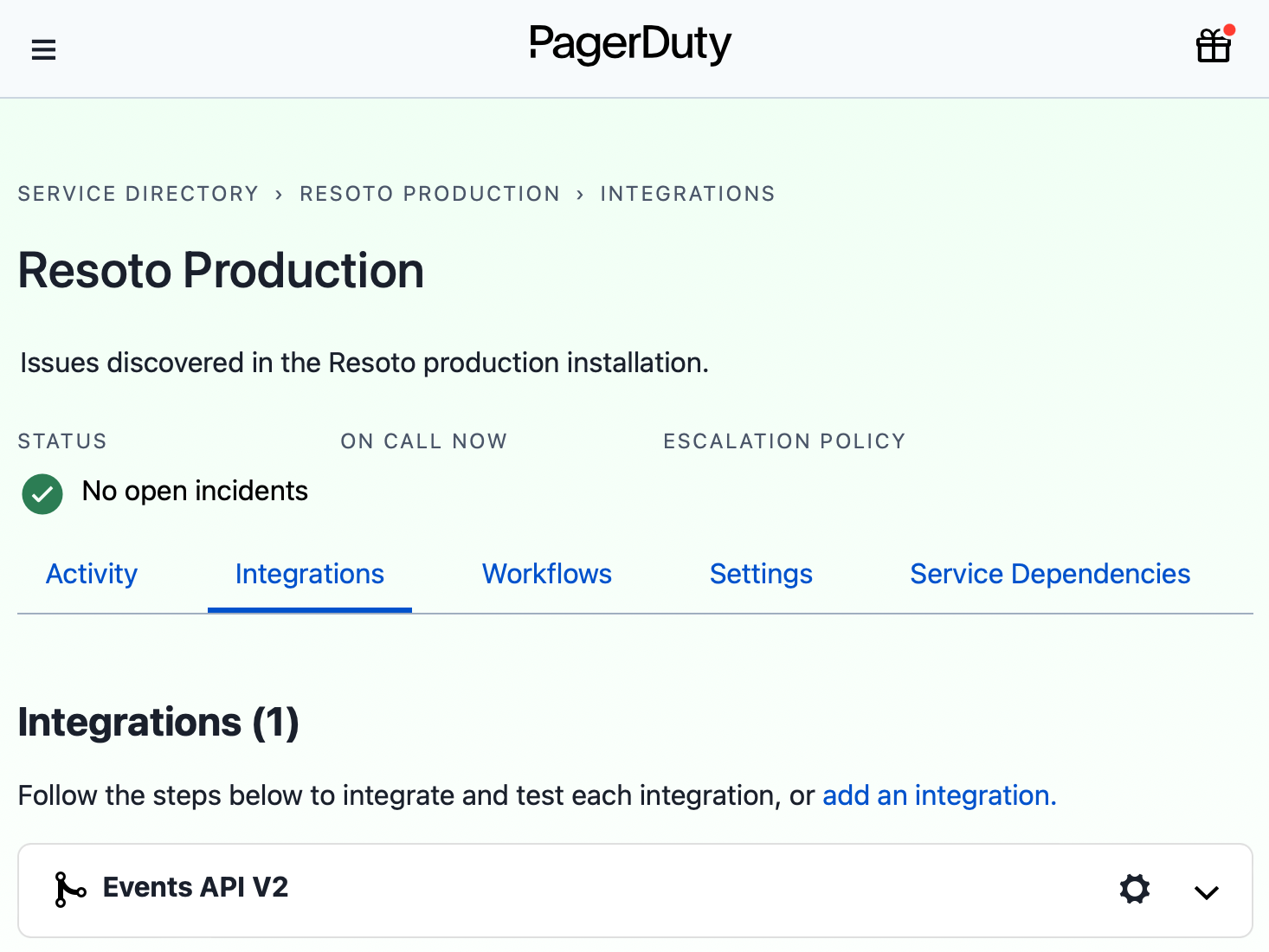

Open the relevant service in PagerDuty and click Integrations. Then, click the Add new integration button.

-

Expand Events API V2 and copy the revealed integration key.

Note: We will refer to this key as the "routing key" for the remainder of these instructions.

-

Open the `fix.core.commands` configuration:

> config edit fix.core.commands

-

Add the routing key copied in step 2 as the default value of the `routing_key` parameter in the `pagerduty` section. This will allow you to execute the `pagerduty` command without specifying the routing key parameter each time.

Info: The `pagerduty` command has the following parameters, all of which are required. Parameters with a default value can be omitted when running the command.

| Parameter | Description | Default Value |
| --- | --- | --- |
| `summary` | Alert summary | |
| `routing_key` | Events API V2 integration key | |
| `dedup_key` | String identifier that PagerDuty will use to ensure that only a single alert is active at a time | |
| `source` | Alert source: location of the affected system (preferably a hostname or FQDN) | `Fix Inventory` |
| `severity` | Alert severity (`critical`, `error`, `warning`, or `info`) | `warning` |
| `event_action` | Alert action (`trigger`, `acknowledge`, `resolve`, or `assign`) | `trigger` |
| `client` | Name of the monitoring client submitting the event | `Fix Inventory` |
| `client_url` | URL to the monitoring client | `https://fixinventory.org/` |
| `webhook_url` | PagerDuty events API URL endpoint | `https://events.pagerduty.com/v2/enqueue` |

-

Define the search criteria that will trigger an alert. For example, let's say we want to send alerts whenever we find a Kubernetes Pod updated in the last hour with a restart count greater than 20:

> search is(kubernetes_pod) and pod_status.container_statuses[*].restart_count > 20 and last_update<1h

kind=kubernetes_pod, name=db-operator-mcd4g, restart_count=[42], age=2mo5d, last_update=23m, cloud=k8s, account=prod, region=kube-system

-

Now that we've defined the alert trigger, we will simply pipe the result of the search query to the `pagerduty` command, replacing the `summary` and `dedup_key` values with your desired alert summary and deduplication key:

> search is(kubernetes_pod) and pod_status.container_statuses[*].restart_count > 20 and last_update<1h | pagerduty summary="Pods are restarting too often!" dedup_key="Fix Inventory::PodRestartedTooOften"

If the defined condition is currently true, you should see a new alert in PagerDuty.
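For orientation, the parameters used above correspond to fields of a PagerDuty Events API V2 event. The following Python sketch builds such an event body from the same values. It assumes that the `pagerduty` command maps its parameters onto the event fields one to one, which this guide does not confirm; `build_event` is a hypothetical helper and `YOUR_ROUTING_KEY` is a placeholder. Nothing is sent over the network.

```python
import json

# Default values taken from the parameter table in this guide.
DEFAULTS = {
    "source": "Fix Inventory",
    "severity": "warning",
    "event_action": "trigger",
    "client": "Fix Inventory",
    "client_url": "https://fixinventory.org/",
}


def build_event(summary, routing_key, dedup_key, **overrides):
    """Build an Events API V2 event body (hypothetical helper, not part of Fix Inventory)."""
    params = {**DEFAULTS, **overrides}
    return {
        "routing_key": routing_key,
        "event_action": params["event_action"],
        "dedup_key": dedup_key,
        "client": params["client"],
        "client_url": params["client_url"],
        # Alert details live in the nested "payload" object.
        "payload": {
            "summary": summary,
            "source": params["source"],
            "severity": params["severity"],
        },
    }


event = build_event(
    summary="Pods are restarting too often!",
    routing_key="YOUR_ROUTING_KEY",  # placeholder, not a real key
    dedup_key="Fix Inventory::PodRestartedTooOften",
)
print(json.dumps(event, indent=2))
```

Passing a keyword such as `severity="critical"` overrides the corresponding default, much as specifying a parameter on the command line would override the configured default value.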

-

Finally, we want to automate checking of the defined alert trigger and send alerts to PagerDuty whenever the result is true. We can accomplish this by creating a job:

> jobs add --id alert_on_pod_failure --wait-for-event post_collect 'search is(kubernetes_pod) and pod_status.container_statuses[*].restart_count > 20 and last_update<1h | pagerduty summary="Pods are restarting too often!" dedup_key="Fix Inventory::PodRestartedTooOften"'
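Because the job sends the same `dedup_key` every time it runs, repeated findings should not create a flood of separate alerts. The Python sketch below is a toy model of that deduplication behavior, not PagerDuty's implementation: `open_alerts` and `send_event` are hypothetical names, and only the `trigger` and `resolve` actions are modeled.

```python
# Toy model of deduplication: open alerts are keyed by dedup_key.
open_alerts = {}


def send_event(event_action, dedup_key, summary=""):
    """Apply one event to the toy alert store and report what happened."""
    if event_action == "trigger":
        if dedup_key in open_alerts:
            # An alert with this key is already open: count the event, add no new alert.
            open_alerts[dedup_key]["events"] += 1
            return "deduplicated"
        open_alerts[dedup_key] = {"summary": summary, "events": 1}
        return "created"
    if event_action == "resolve":
        # Resolving closes the open alert, so a later trigger creates a new one.
        return "resolved" if open_alerts.pop(dedup_key, None) else "ignored"
    raise ValueError(f"unsupported action in this sketch: {event_action}")


key = "Fix Inventory::PodRestartedTooOften"
# Three job runs in a row find the same failing pods.
results = [send_event("trigger", key, "Pods are restarting too often!") for _ in range(3)]
print(results)           # ['created', 'deduplicated', 'deduplicated']
print(len(open_alerts))  # 1
```

In this model, choosing a stable, descriptive `dedup_key` per alert condition keeps one alert open per condition, regardless of how often the job fires.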